Updated December 2025

Forecasting used to be a spreadsheet exercise, a roll-up ritual, and a weekly battle between optimism and reality. But 2026 is different. Revenue teams aren’t just looking for a number anymore; they’re looking for a system that understands the business as well as they do.

The shift is subtle but transformative: forecasts no longer depend solely on CRM fields, stage probabilities, and rep judgment. They increasingly rely on the truths hiding in conversations, usage patterns, buying signals, and quiet moments of risk that never show up in Salesforce.

This guide breaks down what AI forecasting really is, when it actually works, what data matters, and how to operationalize it without overwhelming your team. Consider this the handbook you wish you had before your last QBR.

By the end, you’ll know exactly how to explain AI forecasting to anyone, how to evaluate your readiness, and how tools like ForecastIQ keep the process grounded in reality rather than hope.

Why Forecasting Changed and Why 2026 Made AI Go From “Nice” to Necessary

If you’ve been running forecasts for more than a year, you already feel the shift.

Buying committees keep growing. Champions leave in the middle of cycles. Renewal and expansion signals now depend on usage behavior that reps rarely track. And most leaders are expected to be more accurate with leaner teams and fewer pipeline safety nets.

The old approach (look at your CRM, apply stage math, collect commits, pray) simply doesn’t cut it anymore.

AI didn’t become useful because it’s “cool.” It became useful because the revenue engine became too complex for humans to monitor manually.

Good RevOps leaders already know this: the more your GTM motion depends on buyer engagement, adoption, and repeatable patterns, the more you need a system that watches all those signals continuously.

That’s where AI forecasting fits in.

What AI Revenue Forecasting Actually Is

Here’s the simple version:

AI revenue forecasting is a constantly updating prediction of revenue based on how deals actually behave, not how they are supposed to behave.

It looks at patterns in:

- buyer engagement

- product usage

- deal momentum

- stakeholder involvement

- historical conversion behavior

...and adjusts in real time as those signals change.

Think of it as the forecasting partner who never gets tired, never forgets a signal, and never overstates the size of a deal because they “have a good feeling about it.”

The best part?

It doesn’t replace human judgment; it simply grounds it in reality :)

Where AI Sees What Humans Don’t

Every revenue leader has lived these moments:

A rep swears a deal is fine.

An enterprise buyer says “Let’s regroup next week.”

A renewal looks stable... until usage quietly drops 40%.

Humans catch some of this. AI catches far more of it.

1. Seasonality with nuance

Humans say: “Q4 is always big.”

AI says: “Q4 is big when the early quarter pipeline is strong and expansion usage hits a certain threshold.”

2. Silent pipeline decay

Reps keep deals green until the last moment.

AI sees 10 days of no responses and lowers the deal’s probability long before the forecast call.

3. Champion risk

Humans may not notice a new stakeholder entering late in the cycle.

AI sees the shift in email patterns and meeting dynamics.

4. Usage-driven churn or expansion

Humans read renewal signals mostly from relationship quality.

AI evaluates real-time usage curves against historical churn patterns.

This is the magic:

AI doesn’t work because it’s smart. It works because it pays attention to everything humans don’t have time to track.

When AI Forecasting Works and When It Doesn’t

Let’s be honest: AI forecasting only works if your motion produces enough repeatability and enough signal.

If you have meaningful deal volume, at least a year of consistent data, and a revenue process that repeats quarter after quarter, AI can dramatically improve accuracy.

If you’re early stage, still figuring out ICP, or dealing with chaotic CRM data… the model is only as strong as the inputs. You’ll be better served with simplified stage-weighted forecasting and scenario planning until your foundation stabilizes.
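For teams in that early stage, stage-weighted forecasting can be sketched in a few lines. This is a minimal illustration, not a recommended model: the stage names, the probabilities, and the deal records are all made-up assumptions, and the real probabilities should come from your own historical conversion rates.

```python
# Hypothetical stage-to-win-probability map (illustrative values only).
STAGE_PROBABILITY = {
    "discovery": 0.10,
    "evaluation": 0.30,
    "proposal": 0.50,
    "negotiation": 0.75,
}


def stage_weighted_forecast(deals):
    """Sum each open deal's amount multiplied by its stage probability."""
    return sum(d["amount"] * STAGE_PROBABILITY[d["stage"]] for d in deals)


# Hypothetical open pipeline.
open_deals = [
    {"name": "Acme", "amount": 40_000, "stage": "proposal"},
    {"name": "Globex", "amount": 100_000, "stage": "discovery"},
    {"name": "Initech", "amount": 20_000, "stage": "negotiation"},
]

forecast = stage_weighted_forecast(open_deals)
print(f"Stage-weighted forecast: {forecast:,.0f}")
```

The weakness is visible in the code: every deal in a stage gets the same probability, no matter how the buyer is actually behaving.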

Here’s the gut-check most RevOps leaders use:

- Do we have enough data for the model to learn anything useful?

- Is our sales motion repeatable, or are we reinventing the wheel every quarter?

- Are we willing to let the model be a second opinion, not a replacement for human judgment?

If the answer to all three is “yes,” then AI isn’t just helpful; it becomes a competitive advantage.

The Data That Actually Matters (And the Stuff Everyone Overthinks)

You don’t need perfect data. You need consistent data.

The fundamentals still hold: amount, stage, owner, product, close dates, deal age. But what separates good forecasts from great ones are engagement and usage signals that tell the real story of the deal.

- If a buyer hasn’t replied in two weeks, the model sees it.

- If the champion isn’t on renewal calls, the model registers it.

- If usage is dipping ahead of QBRs, AI takes note.

This is the kind of data humans intend to track but rarely do.

Usage is becoming the new pipeline, especially in consumption and expansion-heavy SaaS motions. A model that can read those patterns has a real shot at predicting renewals and expansions without the optimism tax.
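As a rough sketch of what "reading usage patterns" can mean in practice, the snippet below compares an account's recent usage with the period just before it. The function name, the four-week window, the sample data, and the 30% alert threshold are all assumptions chosen for illustration; a production model would compare against historical churn patterns rather than a fixed cutoff.

```python
from statistics import mean


def usage_change(weekly_usage, window=4):
    """Relative change between the most recent `window` weeks and the
    `window` weeks before them (for example, -0.40 means a 40% drop)."""
    if len(weekly_usage) < 2 * window:
        raise ValueError("need at least 2 * window weeks of usage data")
    recent = mean(weekly_usage[-window:])
    baseline = mean(weekly_usage[-2 * window:-window])
    if baseline == 0:
        raise ValueError("baseline usage is zero; relative change is undefined")
    return (recent - baseline) / baseline


# Hypothetical weekly active-user counts for one account, oldest first.
weekly_active_users = [100, 100, 100, 100, 70, 60, 60, 50]

change = usage_change(weekly_active_users)
at_risk = change <= -0.30  # illustrative threshold, not a benchmark
print(f"Usage change: {change:.0%}, at risk: {at_risk}")
```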

If you have at least 12 months of good history and a clean segmentation structure, you’re already ahead of most teams.

Choosing a Forecasting Approach That Fits Your Motion

Not every business forecasts the same way, and that’s a good thing. The key is choosing a method that matches your reality, then letting AI enhance it rather than bulldoze it.

Here’s how operators think:

1. If you’re SMB or PLG

Volume and velocity matter more than individual deals. AI shines by catching seasonal spikes, usage-driven buying behavior, and momentum trends you’d never see manually.

2. If you’re enterprise

Deals are fewer, larger, and full of stakeholders. AI helps track the pace, engagement, and politics that stage fields ignore. Deal inspection matters, but AI makes inspection honest.

3. If you’re consumption-based

AI becomes almost unfair. Usage curves reveal more about renewal likelihood and expansion potential than any self-reported data.

The point is simple: AI works best when it enhances your current process rather than replacing it.

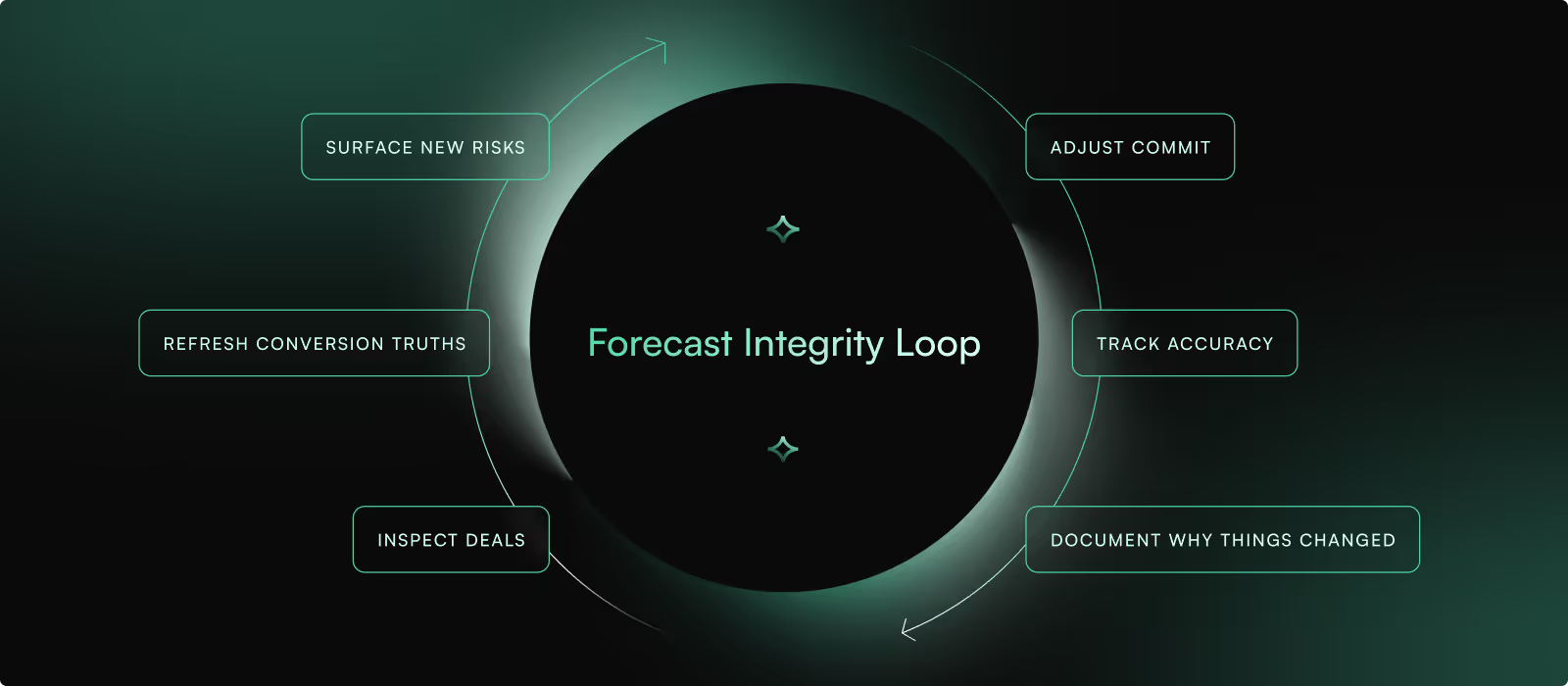

The Forecast Integrity Loop: How Great Operators Keep Forecasts Honest

Every strong forecasting culture shares one habit: they run a consistent loop.

We call it the Forecast Integrity Loop, and it looks like this:

1. Inspect the deals that matter

2. Refresh conversion truths (not assumptions)

3. Surface new risks

4. Adjust commit

5. Track accuracy

6. Document why things changed

Most orgs skip steps four through six, and that’s why their accuracy swings wildly.

AI tools like ForecastIQ reinforce this loop by making it nearly impossible to ignore the signals that matter. It’s not magic; it’s discipline supported by technology.

The Weekly Rituals That Make or Break Accuracy

The truth nobody likes to admit: forecast accuracy is less about intelligence and more about habits.

The teams that nail consistency:

- Inspect deals even when the number “looks fine”

- Remove deals from commit if they’re missing next steps

- Keep stage hygiene tight so the model can learn

- Update scenarios as behavior changes

- Don’t treat week-over-week movement as optional

This is why AI doesn’t replace people. It keeps them honest.

When a deal hasn’t moved in 21 days, the model doesn’t shrug. It asks, “Do you really believe this is closing?”

And that question alone saves quarters.
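The same two habits (no committed deal without a next step, and no deal left idle for 21 days) can be expressed as a simple check. This is only a sketch under assumed field names (`last_activity`, `next_step`) and invented deal records; it is not how any particular tool implements the rule.

```python
from datetime import date

STALL_DAYS = 21  # threshold taken from the 21-day example; tune to your sales cycle


def needs_review(deal, today):
    """Return the reasons a committed deal should be questioned, if any."""
    reasons = []
    idle_days = (today - deal["last_activity"]).days
    if idle_days >= STALL_DAYS:
        reasons.append(f"no activity for {idle_days} days")
    if not deal.get("next_step"):
        reasons.append("missing next step")
    return reasons


# Hypothetical committed deals and review date.
today = date(2026, 3, 2)
commit_deals = [
    {"name": "Acme", "last_activity": date(2026, 2, 27), "next_step": "Legal review"},
    {"name": "Globex", "last_activity": date(2026, 2, 3), "next_step": ""},
]

flags = {d["name"]: needs_review(d, today) for d in commit_deals}
for name, reasons in flags.items():
    print(name, "->", reasons or "ok")
```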

What Good Actually Looks Like (No Sugarcoating)

If you’re forecasting enterprise deals, a 15–25% in-quarter error rate is normal. Anything better is exceptional.

If you’re SMB or velocity, 10–15% is excellent.

Bias is the real enemy. Consistently over-forecasting erodes trust. Consistently under-forecasting destroys planning. Teams that monitor both WAPE (weighted absolute percentage error) and bias tend to stabilize faster and deliver more predictable revenue.
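Both metrics are simple to compute. WAPE is the sum of absolute forecast errors divided by the sum of actuals; bias here is the signed version of the same ratio, so a positive value means over-forecasting. The sketch below uses invented quarterly figures, and the sign convention for bias is an assumption (some teams define it the other way around).

```python
def wape(forecasts, actuals):
    """Weighted absolute percentage error: sum(|forecast - actual|) / sum(|actual|)."""
    total_actual = sum(abs(a) for a in actuals)
    if total_actual == 0:
        raise ValueError("sum of actuals is zero; WAPE is undefined")
    return sum(abs(f - a) for f, a in zip(forecasts, actuals)) / total_actual


def bias(forecasts, actuals):
    """Signed error ratio: positive means over-forecasting, negative under-forecasting."""
    total_actual = sum(actuals)
    if total_actual == 0:
        raise ValueError("sum of actuals is zero; bias is undefined")
    return sum(f - a for f, a in zip(forecasts, actuals)) / total_actual


# Hypothetical forecast vs. actual revenue for four quarters (in thousands).
forecasts = [1200, 900, 1100, 1000]
actuals = [1000, 1000, 1000, 1000]

print(f"WAPE: {wape(forecasts, actuals):.1%}")
print(f"Bias: {bias(forecasts, actuals):+.1%}")
```

Tracking both matters because they answer different questions: WAPE says how far off you are, while bias says in which direction you tend to miss.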

Over time, what feels like “accuracy” is simply this:

Your forecast stops surprising you. :)

Backtesting: The Part Everyone Forgets

Backtesting sounds complicated, but it’s really a post-game review.

You look at the last few quarters, compare what you predicted to what actually happened, and uncover the patterns your brain smoothed over.

Backtesting exposes the uncomfortable truths:

- Your win rates aren’t what you think

- Some segments convert very differently

- Your commit criteria aren’t real

- Your stage definitions encourage bad behavior

It’s humbling and liberating. It sets the stage for everything that follows.
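One small piece of a backtest, checking assumed win rates against what actually closed, might look like the sketch below. The segment names, the assumed rates, and the closed-deal records are all hypothetical; a fuller backtest would also replay past forecasts week by week.

```python
from collections import defaultdict


def actual_win_rates(closed_deals):
    """Observed win rate per segment from closed-won and closed-lost deals."""
    won = defaultdict(int)
    total = defaultdict(int)
    for deal in closed_deals:
        total[deal["segment"]] += 1
        won[deal["segment"]] += deal["won"]  # True counts as 1, False as 0
    return {segment: won[segment] / total[segment] for segment in total}


# Hypothetical win rates the team has been assuming in its forecast.
assumed = {"smb": 0.30, "enterprise": 0.25}

# Hypothetical closed deals from the last few quarters.
closed = (
    [{"segment": "smb", "won": True}] * 1
    + [{"segment": "smb", "won": False}] * 3
    + [{"segment": "enterprise", "won": True}] * 1
    + [{"segment": "enterprise", "won": False}] * 4
)

observed = actual_win_rates(closed)
gaps = {segment: observed[segment] - assumed[segment] for segment in assumed}
for segment, gap in gaps.items():
    print(f"{segment}: assumed {assumed[segment]:.0%}, observed {observed[segment]:.0%} ({gap:+.0%})")
```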

Explainability: The Non-Negotiable Requirement

No CRO will trust a model that cannot explain itself. Explainability has become essential. It is the difference between a forecast that earns confidence and one that feels like a mysterious black box handing down numbers from above.

A good forecasting system should feel like a conversation, not a verdict. Leaders should quickly understand what changed since last week, which signals drove that shift, and what assumptions were updated.

They should also be able to see whether a probability drop came from declining engagement or simply from stage aging.

This level of transparency is what allows leaders to trust the forecast, challenge it when needed, and ultimately improve their process. ForecastIQ is built around this philosophy. AI insights matter only when leaders can interpret them clearly and confidently.

How Forecasting Differs Across Industries

Forecasting is not a one-size-fits-all discipline. It looks very different across SaaS subscription businesses, consumption models, hybrid hardware motions, and services organizations.

Subscription SaaS depends heavily on adoption and stakeholder engagement. These signals often determine renewal and expansion long before a contract is up for review.

Consumption SaaS is shaped by acceleration or slowdown in usage, which tends to predict renewal outcomes more accurately than CRM fields ever can.

Hybrid hardware plus SaaS introduces deployment complexity and technical readiness, something most forecasting systems were never built to interpret.

Services teams operate in an entirely different rhythm, where resource allocation, delivery milestones, and stakeholder satisfaction determine outcomes.

AI does not erase these nuances. What it does is finally give companies a way to model them accurately.

A Real-World Example: What It Looks Like When This Actually Works

A publicly traded SaaS company, roughly six hundred million in annual revenue, reduced its forecast error from thirty-four percent to twelve percent in a single quarter. This improvement did not come from a radical transformation or a complicated technical overhaul.

It came from consistency.

The team cleaned up segmentation and tightened its exit criteria. They enforced next-step discipline, ensuring every deal had real momentum. They tracked executive access, recognizing how important it is for enterprise selling.

They refreshed win rates monthly instead of relying on outdated assumptions. They adopted ForecastIQ to surface risk earlier and bring objectivity to deal inspection. And only once this operational foundation was solid did they layer in AI-driven signals.

The result was a forecast that finally matched reality. Trust in the process grew, and the AI amplified that trust even further.

The Problems That Sabotage Forecasts (And How to Fix Them)

Every RevOps leader has seen the same issues repeat themselves. Stages drift out of alignment with what is actually happening. Close dates slip week after week without accountability. Large deals distort the forecast. The model feels confusing or unpredictable. Managers push back against AI scores because they conflict with their intuition.

Fortunately, the fixes are straightforward. They begin with strong operational habits.

Make next steps and activity updates mandatory so deals stop sitting idle. Require clear reasons for date changes to eliminate sandbagging and optimism bias. Use scenario ranges to reduce volatility created by a few oversized deals.
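One simple way to build a scenario range around oversized deals is sketched below. It is one convention among many and purely an assumption for illustration: small deals are probability-weighted in every scenario, while deals at or above a size threshold count for nothing in the worst case, their weighted value in the base case, and their full amount in the best case. The deal data and the threshold are invented.

```python
def scenario_range(deals, large_deal_threshold):
    """Best/base/worst totals where only oversized deals vary by scenario."""
    worst = base = best = 0.0
    for deal in deals:
        weighted = deal["amount"] * deal["probability"]
        base += weighted
        if deal["amount"] >= large_deal_threshold:
            # Oversized deal: closes in full in the best case,
            # slips entirely (adds nothing) in the worst case.
            best += deal["amount"]
        else:
            worst += weighted
            best += weighted
    return {"worst": worst, "base": base, "best": best}


# Hypothetical pipeline with one deal large enough to distort the number.
deals = [
    {"amount": 20_000, "probability": 0.5},
    {"amount": 40_000, "probability": 0.25},
    {"amount": 500_000, "probability": 0.5},
]

scenarios = scenario_range(deals, large_deal_threshold=250_000)
print(scenarios)
```

Reporting the range rather than a single number makes it obvious how much of the forecast rides on one deal.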

Introduce AI gradually and explain how it reads signals. Treat its recommendations as a second opinion rather than a final ruling.

These practices are not new, but AI reinforces them by making it impossible to ignore the truth inside the pipeline.

Which Tools Work Best (Honest, Not Political)

Choosing a forecasting tool is not about comparing feature checklists. It is about understanding the needs of your revenue engine and matching them with the right system.

MaxIQ, which brings together ForecastIQ, InspectIQ, and EchoIQ, is ideal for teams that want forecasting grounded in real buyer signals, simple governance, and a connected workflow that does not require heavy operational overhead.

Clari is a strong fit for large organizations with complex roll-up structures and multiple forecasting layers.

Aviso works exceptionally well for companies with deep data science maturity that want heavy machine learning modeling behind their forecasts.

BoostUp is a good choice when email and activity insights are as important as pipeline dynamics.

Salesforce Einstein offers convenience for teams that want native predictions directly inside their CRM, though it typically provides less signal depth than specialized forecasting platforms.

No tool can save a broken process. But when paired with strong habits, the right system accelerates forecasting maturity dramatically.

How to Roll Out AI Forecasting in Four Weeks

This process doesn’t need to take quarters. Most teams get to a stable flow in a month.

Week 1: Align on goals, definitions, commit rules, and fields

Week 2: Clean up data and recalculate win rates

Week 3: Run a trial on last quarter’s activity

Week 4: Go live and freeze logic

After that, the habits take over.

How MaxIQ's ForecastIQ Brings It All Together

ForecastIQ isn’t just a model; it’s the forecasting system RevOps leaders wish existed years ago.

It brings together:

- Real buyer signals from EchoIQ

- Deal inspection with InspectIQ

- Honest stage calibration

- Built-in best/base/worst scenarios

- Accuracy dashboards

- A complete “who changed what, when, why” history

And it lets teams grow into AI rather than being forced into a black-box model on day one.

That’s the part revenue leaders appreciate most: You stay in control. The AI just keeps everything honest.


Ready to see MaxIQ in action? Get a demo now
